Store of the Future

A re-imagining of the athleisure retail experience to be more intuitive, personalized, and streamlined

Client: Lululemon

Technology:

- FITS

  - Magic Mirror - 2-way mirror, display, proof of concept Node.js web app, WebSockets, commercial-grade RFID reader, PC

  - Ladder - force-sensing resistors, Arduino+WiFi shield, Hue bulb with Hue Bridge

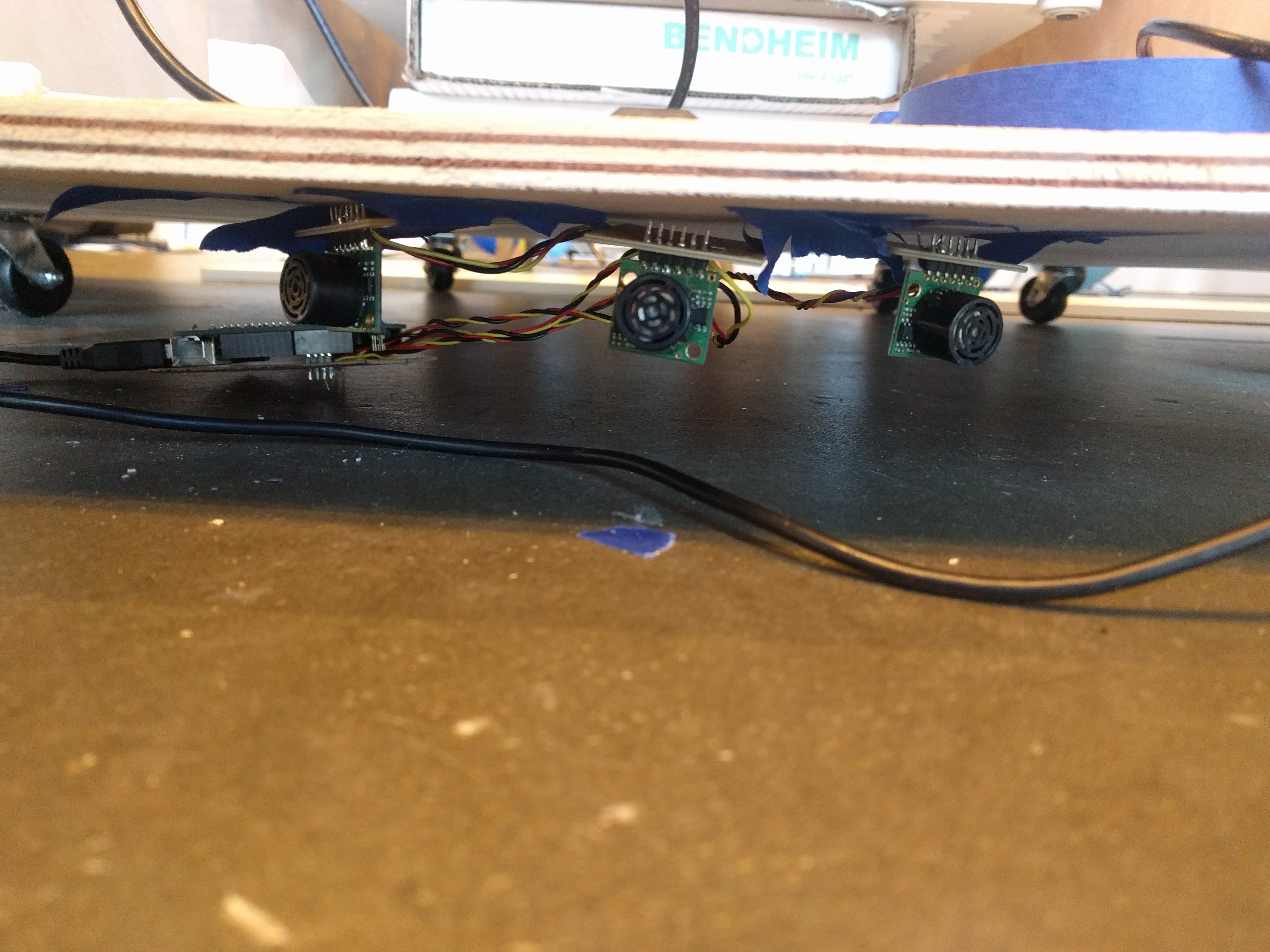

- STORY ARCS - Maxbotix ultrasonic sensors (3 per Arc), small form-factor projector & computer

- SWITCHABLE FILM - Smart Tint PDLC switchable film

Role: Developer

Second Story designed and prototyped two experiences for a “store of the future” concept. We built the prototypes in our lab with working technology, but ultimately Lululemon never implemented the experience onsite in their stores. In addition to the two experiences described below, we also experimented with using switchable film to create an animated storefront facade.

FITS was an interactive fitting room with a reactive “magic mirror” and a 2-rung smart ladder on which guests would hang the clothes they brought in, with each rung designated as “Love It” or “Hate It”. The ladder used force-sensing resistors (FSRs) to detect whether there were clothes on each rung. The mirror contained an RFID reader that detected which items were in the fitting room, and the mirror's content changed accordingly. In addition, a Philips Hue bulb on the outside of the fitting room served as an indicator light, showing store attendants the current status of the guest in the room.
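As a rough illustration of how the ladder and RFID inputs could be combined into a single room state, here is a minimal sketch. It is not the project's actual code: the threshold value, the status names (`vacant`, `trying`, `sorted`), the colors, and the function name `deriveRoomState` are all hypothetical.

```javascript
// Hypothetical threshold: raw FSR readings above this count as "garment on rung".
const FSR_THRESHOLD = 200;

// Hypothetical mapping from room status to the indicator bulb's color.
const STATUS_COLORS = {
  vacant: "green",
  trying: "yellow",
  sorted: "blue",
};

/**
 * Derive the fitting-room state from one snapshot of sensor data.
 * @param {{loveIt: number, hateIt: number}} fsr  raw reading for each rung
 * @param {string[]} tags  RFID tag IDs currently seen by the mirror's reader
 */
function deriveRoomState(fsr, tags) {
  const rungs = {
    loveIt: fsr.loveIt > FSR_THRESHOLD,
    hateIt: fsr.hateIt > FSR_THRESHOLD,
  };

  // Assumed rules: items in the room means "trying"; anything hung on a rung means "sorted".
  let status = "vacant";
  if (tags.length > 0) status = "trying";
  if (rungs.loveIt || rungs.hateIt) status = "sorted";

  return {
    rungs,
    status,
    indicatorColor: STATUS_COLORS[status],
    itemsInRoom: [...new Set(tags)], // drop duplicate tag reads
  };
}
```

Keeping this as a pure function of the latest readings would make it easy to check the rules without any hardware attached.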

STORY ARCS was a group of 3 store displays, each holding 3 mannequins and backed by a translucent acrylic projection surface. Each ARC had 3 ultrasonic proximity sensors mounted to the bottom of the structure and connected to an Arduino, whose readings triggered content projected from a small-form-factor projector whenever a guest walked by the ARC. The projection was mapped to the form of the mannequins and the projection surface behind them.

On this project, I was responsible for R&D and prototyping for the main technological elements of each experience. For the storefront facade experience, I researched different switchable film brands and finally landed on Smart Tint. I experimented with cutting the Smart Tint tiles into different shapes and applying different amounts of current to test what kinds of patterns, configurations, and animations of opacity could be created.

On FITS, I built the POC web app for the magic mirror in Node.js. The app used WebSockets to pass data between the RFID reader, the FSRs and their connected Arduino+WiFi shield, and the Hue bulb and Bridge. I tested different types of force, weight, and object-detection sensors to determine which best fit the ladder's physical design and technical requirements, then tested the selected FSR on different physical shapes and textures, and in different positions and orientations, to narrow down our physical design options. I also specced the RFID reader based on physical and technological requirements. I tested reading distances for the RFID reader and data transmission distances for the Hue Bridge to inform the dimensions of the fitting room and the placement of the hardware in the space. Finally, I worked with our physical designer to build a working proof-of-concept prototype of the magic mirror, complete with the app, RFID reader, Hue bulb indicator light, and FSR-enabled ladder.
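The message flow described above can be sketched as a small in-memory hub that accepts device messages and pushes the updated state to any connected display. This is an illustrative sketch only: the message shapes (`rfid`, `fsr`), the field names, and `createHub` are assumptions, and the `send` callbacks stand in for real WebSocket connections so the example runs without a network.

```javascript
// Minimal hub: devices push JSON messages in, subscribers receive state snapshots.
function createHub() {
  const state = { tags: [], rungs: { loveIt: false, hateIt: false } };
  const subscribers = new Set();

  function snapshot() {
    return JSON.stringify({ type: "state", state });
  }

  function broadcast() {
    const payload = snapshot();
    for (const send of subscribers) send(payload);
  }

  return {
    // `send` stands in for a socket's send method (hypothetical wiring).
    subscribe(send) {
      subscribers.add(send);
      send(snapshot()); // give new displays the current state right away
      return () => subscribers.delete(send);
    },

    // Call with each raw message string received from a device.
    handleMessage(raw) {
      let msg;
      try {
        msg = JSON.parse(raw);
      } catch {
        return false; // ignore malformed input
      }
      if (!msg || typeof msg !== "object") return false;

      if (msg.type === "rfid" && Array.isArray(msg.tags)) {
        state.tags = msg.tags;
      } else if (msg.type === "fsr" && (msg.rung === "loveIt" || msg.rung === "hateIt")) {
        state.rungs[msg.rung] = Boolean(msg.loaded);
      } else {
        return false; // unknown message type
      }

      broadcast();
      return true;
    },
  };
}

// Usage: a display subscribes, then a device reports a tag read.
const demoHub = createHub();
demoHub.subscribe((payload) => console.log("to mirror:", payload));
demoHub.handleMessage(JSON.stringify({ type: "rfid", tags: ["TAG-001"] }));
```

In a real deployment each `send` would presumably be bound to a live socket, and `handleMessage` would be called from that socket's message handler.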

For STORY ARCS, I researched and tested different kinds of motion-detection sensors. After settling on ultrasonic, I tested sensors with a range of detection zone sizes to determine the best model for our use case. I worked with the physical designer to choose the optimal mounting location for the ultrasonic sensors on the STORY ARC structure and helped inform the design of a sensor enclosure. I tested projection locations on the structure to find the position with the maximum projection coverage and clarity. Finally, I built a POC Node.js application that triggered projected video content based on sensor data sent from the Arduino. (Mudit handled projection mapping and the logic for which content was triggered on which ARC.)
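To illustrate the kind of trigger logic such an application needs, here is a minimal sketch that fires when any of an ARC's sensors reports a nearby guest, with a cooldown so the content does not restart on every reading. The distance threshold, cooldown length, and the name `createArcTrigger` are hypothetical; real values would come from testing on the structure.

```javascript
// Hypothetical tuning values.
const TRIGGER_DISTANCE_CM = 150; // closer than this counts as "guest nearby"
const COOLDOWN_MS = 10000;       // minimum gap between two triggers

/**
 * Build a handler for one ARC.
 * @param {(nearestCm: number) => void} onTrigger  called when content should play
 * @param {() => number} now  clock in milliseconds (injectable for testing)
 */
function createArcTrigger(onTrigger, now = Date.now) {
  let lastTriggeredAt = -Infinity;

  // `distancesCm` holds one reading per sensor on the ARC.
  return function handleReadings(distancesCm) {
    const nearest = Math.min(...distancesCm); // Infinity if the array is empty
    const t = now();
    if (nearest < TRIGGER_DISTANCE_CM && t - lastTriggeredAt >= COOLDOWN_MS) {
      lastTriggeredAt = t;
      onTrigger(nearest);
      return true;
    }
    return false;
  };
}

// Usage: feed in the three sensor readings each time the Arduino reports.
const handleArcReadings = createArcTrigger((cm) => {
  console.log(`guest detected at ${cm} cm, start video`);
});
handleArcReadings([300, 120, 400]);
```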

After the project ended, I repurposed the mannequin and projection technology as part of a haunted house for kids in our lab space, as seen below: